Mastering Text Preprocessing in NLP: A Practical Guide with Code & Real-World Use Cases

💡 Highly recommended: For a hands-on learning experience, explore the detailed text preprocessing notebook, available as a Jupyter Notebook on GitHub or on Kaggle. It includes modular code, visual outputs, and reusable functions, and it took 10 days of focused effort to create. The code is simple and beginner-friendly: plug in your own text and watch each step transform it.

Natural Language Processing (NLP) is one of the most transformative fields in AI today. From chatbots to search engines and sentiment analysis tools, NLP allows machines to understand and generate human language. But before we dive into fancy models like BERT or GPT, there's one essential foundation we can't ignore: text preprocessing.

Preprocessing transforms raw, noisy text into a clean and normalized form that machines can work with. Think of it as preparing ingredients before cooking — the better the prep, the better the outcome.

In this post, I'll walk you through every important text preprocessing step, explain why each one matters, name a real-world use case, and provide Python code for each (mostly spaCy, with NLTK, TextBlob, emoji, and the built-in re module where they fit).

👁️ Why Is Text Preprocessing Important?

Raw text from sources like web pages, tweets, reviews, or chat logs is full of noise: inconsistent casing, typos, emojis, HTML tags, URLs, slang, and more. If you feed this directly into an NLP model, it might learn meaningless patterns or perform poorly.

Preprocessing helps with:

- Improving model accuracy

- Reducing vocabulary size

- Increasing training speed

- Enhancing generalization

- Making features meaningful
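To make the vocabulary-size point concrete, here is a minimal sketch on a made-up three-sentence corpus. The `vocabulary` helper and the sample sentences are assumptions for illustration only; it counts unique whitespace-separated tokens before and after lowercasing and trimming punctuation.

```python
# Toy corpus: the same three words appear with different casing and punctuation.
docs = [
    "Apple is great.",
    "apple is GREAT!",
    "APPLE IS great?",
]

def vocabulary(texts):
    """Return the set of whitespace-separated tokens across all texts."""
    return {token for text in texts for token in text.split()}

raw_vocab = vocabulary(docs)

# Lowercase each word and strip surrounding punctuation before counting again.
clean_docs = [" ".join(w.strip(".,!?").lower() for w in d.split()) for d in docs]
clean_vocab = vocabulary(clean_docs)

print(len(raw_vocab), "unique tokens before preprocessing")   # 8
print(len(clean_vocab), "unique tokens after preprocessing")  # 3
```

A smaller vocabulary means fewer features to learn from the same amount of data, which is where the speed and generalization benefits tend to come from.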

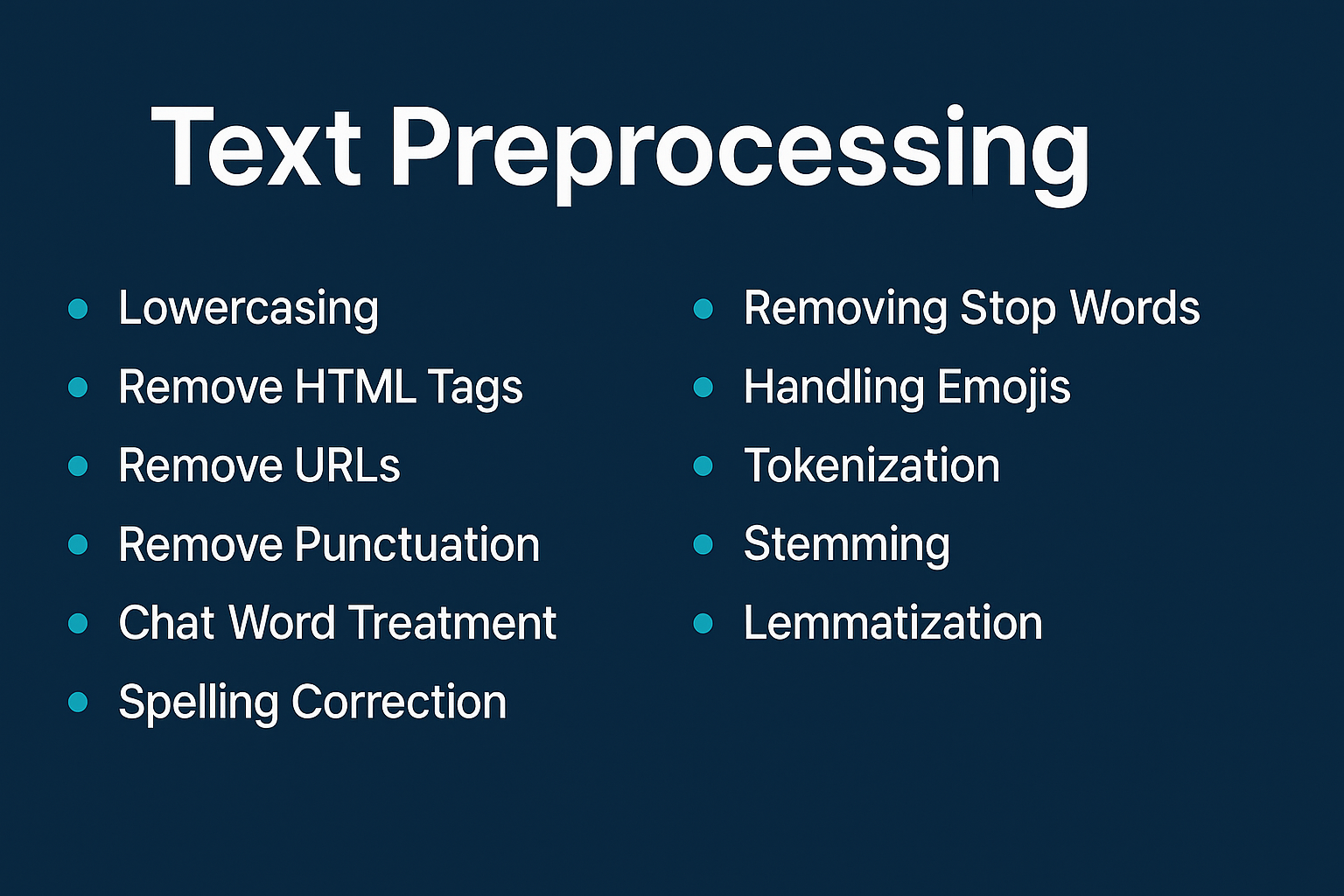

🔢 The Complete Text Preprocessing Pipeline

💡 Why spaCy? I use spaCy rather than NLTK for tokenization, stopword removal, and lemmatization because a single pipeline call returns tokens together with their stopword flags and lemmas, and it is built for speed on larger corpora. NLTK still appears below for stemming, which spaCy does not offer.

Below are the steps I followed, each explained with code, rationale, and real-world examples.

1. Lowercasing

Why? To treat "Apple" and "apple" as the same word. It reduces vocabulary size and inconsistencies.

text = "Apple is looking at buying a startup."

processed_text = text.lower()

print("Original:", text)

print("\nProcessed:", processed_text)

# Original: Apple is looking at buying a startup.

# Processed: apple is looking at buying a startup.

Use case: Email classification, sentiment analysis

2. Removing HTML Tags

Why? Scraped data often contains HTML tags such as <div>, <p>, and <a>. They describe page layout rather than language, so they only add noise.

import re

text = "<p> Welcome to NLP! </p>"

processed_text = re.sub(r'<.*?>', '', text)

print("Original:", text)

print("\nProcessed:", processed_text.strip())

# Original: <p> Welcome to NLP! </p>

# Processed: Welcome to NLP!

Use case: Web scraping, product reviews
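The regex above is fine for simple fragments, but it leaves HTML entities such as `&amp;` untouched. As a hedged alternative, the sketch below uses the standard library's `html.parser` to keep only the text nodes and decode entities. The `TagStripper` class and `strip_html` function are hypothetical names, not part of the original notebook.

```python
from html.parser import HTMLParser

class TagStripper(HTMLParser):
    """Collects only the text nodes of an HTML fragment (hypothetical helper)."""

    def __init__(self):
        # convert_charrefs=True decodes entities such as &amp; into "&".
        super().__init__(convert_charrefs=True)
        self.chunks = []

    def handle_data(self, data):
        self.chunks.append(data)

def strip_html(raw):
    parser = TagStripper()
    parser.feed(raw)
    parser.close()
    # Collapse the whitespace left behind where tags used to be.
    return " ".join("".join(parser.chunks).split())

print(strip_html('<p>Fish &amp; chips <a href="/menu">menu</a></p>'))
# Fish & chips menu
```

For heavily malformed pages, a dedicated HTML library is usually the safer choice; this sketch only covers the common case.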

3. Removing URLs

Why? Links rarely add semantic meaning and can distract models.

import re

text = "Check out https://example.com for more info."

# Also consume the whitespace before the URL so no double space is left behind

processed_text = re.sub(r'\s*(?:https?://|www\.)\S+', '', text)

print("Original:", text)

print("\nProcessed:", processed_text)

# Original: Check out https://example.com for more info.

# Processed: Check out for more info.

Use case: Twitter sentiment analysis, customer feedback
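Sometimes the presence of a link is itself a useful signal (spam messages, for instance, tend to contain them). In that case, one option is to replace each URL with a placeholder token instead of deleting it. The sketch below assumes a simplified URL pattern and a hypothetical `mask_urls` helper; the `<URL>` placeholder is an arbitrary choice.

```python
import re

# Simplified pattern: anything starting with http(s):// or www. up to the next space.
URL_PATTERN = re.compile(r"(?:https?://|www\.)\S+")

def mask_urls(text, placeholder="<URL>"):
    """Replace each URL with a placeholder token instead of deleting it."""
    return URL_PATTERN.sub(placeholder, text)

print(mask_urls("Check out https://example.com for more info."))
# Check out <URL> for more info.
```

Note that this pattern also swallows punctuation glued to the end of a link, so a trailing comma or period disappears along with the URL.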

4. Removing Punctuation

Why? Punctuation may not be useful in some NLP tasks like classification.

import re

text = "Hello!!! How are you???"

processed_text = re.sub(r'[^\w\s]', '', text)

print("Original:", text)

print("\nProcessed:", processed_text)

# Original: Hello!!! How are you???

# Processed: Hello How are you

Use case: Spam detection, topic classification
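One side effect worth knowing: the pattern above also removes apostrophes, so "Don't" becomes "Dont". If contractions matter for your task, a variant that keeps selected characters may help. This is a minimal sketch; `remove_punctuation` and its `keep` parameter are hypothetical names.

```python
import re

def remove_punctuation(text, keep="'"):
    """Drop punctuation except the characters listed in `keep` (hypothetical helper)."""
    # re.escape guards against characters like "-" or "]" breaking the character class.
    pattern = rf"[^\w\s{re.escape(keep)}]"
    return re.sub(pattern, "", text)

print(remove_punctuation("Don't stop!!! It's great..."))
# Don't stop It's great
```

Passing `keep=""` reproduces the behavior of the original one-liner.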

5. Handling Emojis

Why? Emojis can carry semantic meaning (e.g., sentiment) and should be converted to text for models to process.

import emoji

text = "I love NLP! 😍"

processed_text = emoji.demojize(text)

print("Original:", text)

print("\nProcessed:", processed_text)

# Original: I love NLP! 😍

# Processed: I love NLP! :smiling_face_with_heart-eyes:

# (default name in recent emoji releases; the shorter :heart_eyes: is its alias)

Use case: Product reviews, social media mining

6. Slang & Chat Abbreviations Handling

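Why? Expanding abbreviations such as "LOL" to "laughing out loud" gives the model ordinary words instead of rare, opaque tokens.

For broader coverage, convert a comprehensive slang words CSV into a Python dictionary and apply it to your text data in the same way as the small dictionary here.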

import spacy

nlp = spacy.load("en_core_web_sm")

slangs = {"brb": "be right back", "ttyl": "talk to you later"}

text = "brb, ttyl!"

doc = nlp(text)

processed_text = ' '.join([slangs.get(token.text.lower(), token.text) for token in doc])

print("Original:", text)

print("\nProcessed:", processed_text)

# Original: brb, ttyl!

# Processed: be right back , talk to you later !

Use case: Social media or chatbot training data
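Loading a slang CSV into a dictionary could look roughly like the sketch below. The column names `slang` and `meaning` and the file name in the comment are assumptions; adjust them to whatever your CSV actually uses. An in-memory string stands in for the file so the example runs on its own.

```python
import csv
import io

def load_slang_map(csv_file):
    """Build {slang: expansion} from a two-column CSV with a header row."""
    reader = csv.DictReader(csv_file)
    # Lowercase the keys so lookups can be case-insensitive.
    return {row["slang"].strip().lower(): row["meaning"].strip() for row in reader}

# Stand-in for open("slang.csv", newline="", encoding="utf-8"); the file name
# and the "slang"/"meaning" column names are assumptions.
sample_csv = io.StringIO("slang,meaning\nBRB,be right back\nttyl,talk to you later\n")
slangs = load_slang_map(sample_csv)

print(slangs)
# {'brb': 'be right back', 'ttyl': 'talk to you later'}
```

The resulting dictionary can be passed straight into the `slangs.get(...)` lookup used above.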

7. Spelling Correction

Why? Corrects typos that models might otherwise treat as unique tokens.

from textblob import TextBlob

text = "I havv good speling"

processed_text = str(TextBlob(text).correct())

print("Original:", text)

print("\nProcessed:", processed_text)

# Original: I havv good speling

# Processed: I have good spelling

Use case: Email parsing, resume screening

8. Stopword Removal

Why? Removes words like "is", "the", "a" that may not help classification tasks.

import spacy

nlp = spacy.load("en_core_web_sm")

text = "This is an example."

doc = nlp(text)

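# Drop punctuation too; otherwise the final "." would survive as its own token
processed_text = ' '.join([token.text for token in doc if not token.is_stop and not token.is_punct])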

print("Original:", text)

print("\nProcessed:", processed_text)

# Original: This is an example.

# Processed: example

Use case: Text classification, topic modeling
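A caution for sentiment tasks: stopword lists often include negations such as "not", and removing them can flip the meaning of a sentence. The sketch below uses a tiny made-up stopword set (not spaCy's list) and a hypothetical `remove_stopwords` helper to show the effect of keeping negations.

```python
# Tiny illustrative stopword list; real lists (spaCy, NLTK) are much longer.
STOPWORDS = {"this", "is", "a", "an", "the", "not", "no", "was"}
# Words we choose to keep because they change the meaning of a sentence.
NEGATIONS = {"not", "no", "never"}

def remove_stopwords(text, keep=NEGATIONS):
    """Remove stopwords, except those explicitly listed in `keep`."""
    tokens = text.lower().split()
    return " ".join(t for t in tokens if t not in STOPWORDS or t in keep)

print(remove_stopwords("This movie was not good", keep=set()))
# movie good
print(remove_stopwords("This movie was not good"))
# movie not good
```

Whether to keep negations depends on the task: for topic modeling they rarely matter, while for sentiment analysis they often do.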

9. Tokenization

Why? Converts text into individual tokens (words or subwords).

import spacy

nlp = spacy.load("en_core_web_sm")

text = "NLP is awesome!"

doc = nlp(text)

processed_text = [token.text for token in doc]

print("Original:", text)

print("\nProcessed:", processed_text)

# Original: NLP is awesome!

# Processed: ['NLP', 'is', 'awesome', '!']

Use case: All NLP pipelines, embedding generation

10. Stemming

Why? Strips suffixes with fixed rules to reduce words to a common stem. The result may not be a real word (e.g., "studies" becomes "studi").

from nltk.stem import PorterStemmer

import spacy

text = "The children were running."

nlp = spacy.load("en_core_web_sm")

doc = nlp(text)

porter = PorterStemmer()

processed_text = [porter.stem(token.text) for token in doc]

print("Original:", text)

print("\nProcessed:", processed_text)

# Original: The children were running.

# Processed: ['the', 'children', 'were', 'run', '.']

Use case: Search engines, indexing

11. Lemmatization

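Why? Like stemming, but it uses vocabulary and part-of-speech information to return real dictionary forms (lemmas), so "children" becomes "child" and "were" becomes "be".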

import spacy

nlp = spacy.load("en_core_web_sm")

text = "The children were running."

doc = nlp(text)

processed_text = [token.lemma_ for token in doc]

print("Original:", text)

print("\nProcessed:", processed_text)

# Original: The children were running.

# Processed: ['the', 'child', 'be', 'run', '.']

Use case: Text classification, translation, QA systems

📚 Final Thoughts

Preprocessing isn't just "cleaning" — it's an essential NLP engineering skill that can determine the success or failure of your models. A well-preprocessed dataset helps extract meaningful patterns, reduces errors, and speeds up your workflow.

This guide gives you everything you need to build your own basic preprocessing pipeline — and think critically about what steps are necessary for your specific NLP problem.
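As a starting point, several of the regex-based steps can be chained into one function. This is a minimal standard-library sketch, not the notebook's implementation: the helper names and `DEFAULT_STEPS` are hypothetical, and spaCy-based steps such as stopword removal or lemmatization could be added as further callables.

```python
import html
import re

def strip_html(text):
    # Remove tags first, then decode entities such as &amp;.
    return html.unescape(re.sub(r"<.*?>", " ", text))

def strip_urls(text):
    return re.sub(r"(?:https?://|www\.)\S+", " ", text)

def strip_punctuation(text):
    return re.sub(r"[^\w\s]", "", text)

def collapse_whitespace(text):
    return " ".join(text.split())

# Order matters: URLs must be removed before punctuation removal breaks them apart.
DEFAULT_STEPS = (str.lower, strip_html, strip_urls, strip_punctuation, collapse_whitespace)

def preprocess(text, steps=DEFAULT_STEPS):
    """Apply each step in sequence and return the cleaned text."""
    for step in steps:
        text = step(text)
    return text

print(preprocess("<p>Check out https://example.com for MORE info!!!</p>"))
# check out for more info
```

Because the steps are passed in as a tuple, you can drop or reorder them per task, for example skipping `str.lower` when casing carries meaning.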

Stay tuned as I continue this journey — up next: Named Entity Recognition (NER), Vectorization, and Transformers!

✨ "Good models start with great preprocessing."